Redmon, Joseph Divvala, Santosh Girshick, Ross Farhadi, Ali (2015): You Only Look Once. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. (2016): High-Quality Depth from Uncalibrated Small Motion Clip. Barbara (2017): A Digital Humanities Approach to Film Colors. In: Zeitschrift für Medienwissenschaft, 5, pp. Furthermore, the system will be connected to the Timeline of Historical Film Colors, a comprehensive web resource on the technology and aesthetics of film colors: įlueckiger, Barbara (2011): Die Vermessung ästhetischer Erscheinungen. In a subsequent step the system will be developed into a modular crowd-sourcing platform that enables researchers, students and a general audience to execute their film analyses and to feed the resulting data into the system. This connection, then, allows for systematic queries to investigate and display color patterns in relation to character’s emotional states, narration, milieu, taste or film historiographic data such as year, cinematographers, genres, etc. The results of this automatic analyses are then connected to the results of the human-based analysis by extracting the data from the databases and comparing them to the visualizations that come out of the computer vision algorithm. The algorithm chosen is designed for this exact problem where the camera parameters are unknown. Consecutive frames give crucial information about the relative distance of the pixels to the camera. We accomplish this via a depth-estimation algorithm (Ha 2016) that further localizes characters in the movies. The advantage of YOLO is its fast processing time which might be crucial for the project considering the huge number of movies to be processed.Ĭonvolutional neural networks can easily detect and mark objects in bounding boxes, but extracting complex contours or heat-maps is more challenging. For the project, You Only Look Once (YOLO) object detection is used (Redmon et al.

Since person detection is a well-known problem in computer vision, there are pre-trained neural networks specifically tailored for the task. In the context of this project, objects are the characters of the movies with further potential to expand towards other objects which might be relevant.

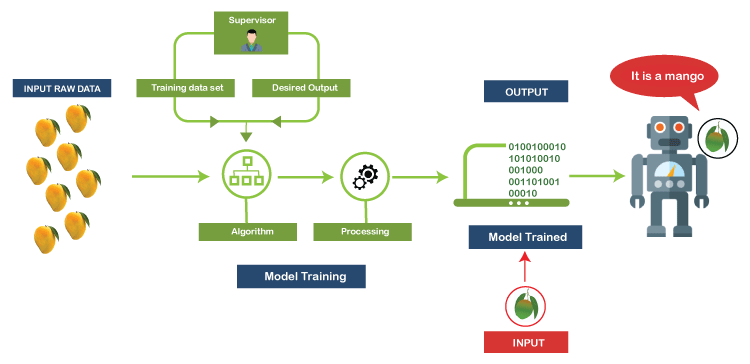

Latest research shows that neural networks are unmatched when object detection is considered.

In order to develop the aforementioned autonomous computer vision algorithm, deep neural networks are leveraged.

COLOR MACHINE LEARNING SOFTWARE

This human-based approach is now being extended by an advanced software that is able to detect the figure-ground configuration and to plot the results into corresponding color schemes based on a perceptually uniform color space (see Flueckiger 2011 and Flueckiger 2017, in press). To this aim the research team analyzes a large group of 400 films from 1895 to 1995 with a protocol that consists of about 600 items per segment to identify stylistic and aesthetic patterns of color in film. The research project FilmColors, funded by an Advanced Grant of the European Research Council, aims at a systematic investigation into the relationship between film color technologies and aesthetics, see Paredes, Rafael Ballester-Ripoll, Renato Pajarola Barbara Flueckiger, Noyan Evirgen, Enrique G.
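The software described above separates figure from ground and expresses color in a perceptually uniform color space. The following is a minimal sketch of that idea, not the project's actual software. It makes the following assumptions:

* The color space is CIELAB with a D65 white point. The text does not name the space, and CIELAB is only approximately uniform.
* The "figure" is every pixel inside a person bounding box of the kind a detector such as YOLO returns. Boxes are given as hypothetical `(x0, y0, x1, y1)` half-open pixel ranges.
* The function names `srgb_to_lab`, `figure_ground_colors` and `delta_e76` are illustrative only.

```python
import math
from typing import List, Optional, Sequence, Tuple

RGB = Tuple[int, int, int]
Lab = Tuple[float, float, float]
Box = Tuple[int, int, int, int]  # hypothetical format: (x0, y0, x1, y1), half-open

# D65 reference white in CIE XYZ (Y normalised to 1); assumed white point.
_WHITE = (0.95047, 1.0, 1.08883)


def _linearize(c: float) -> float:
    """Undo the sRGB transfer curve for one channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t: float) -> float:
    """CIELAB companding function (cube root with a linear toe near black)."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def srgb_to_lab(rgb: RGB) -> Lab:
    """Convert an 8-bit sRGB triple to CIELAB (L*, a*, b*) under D65."""
    r, g, b = (_linearize(v / 255) for v in rgb)
    # Linear sRGB -> CIE XYZ (standard sRGB/D65 matrix).
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = _f(x / _WHITE[0]), _f(y / _WHITE[1]), _f(z / _WHITE[2])
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def _mean(values: List[Lab]) -> Optional[Lab]:
    """Component-wise mean of Lab triples, or None for an empty list."""
    if not values:
        return None
    n = len(values)
    return tuple(sum(v[i] for v in values) / n for i in range(3))


def figure_ground_colors(
    frame: Sequence[Sequence[RGB]], boxes: Sequence[Box]
) -> Tuple[Optional[Lab], Optional[Lab]]:
    """Mean Lab color of figure (pixels inside any box) and of ground (the rest)."""
    figure: List[Lab] = []
    ground: List[Lab] = []
    for y, row in enumerate(frame):
        for x, px in enumerate(row):
            inside = any(x0 <= x < x1 and y0 <= y < y1 for x0, y0, x1, y1 in boxes)
            (figure if inside else ground).append(srgb_to_lab(px))
    return _mean(figure), _mean(ground)


def delta_e76(p: Lab, q: Lab) -> float:
    """CIE 1976 color difference: Euclidean distance in CIELAB."""
    return math.dist(p, q)


if __name__ == "__main__":
    # Toy 4x4 frame: a red 2x2 "character" on a grey background.
    grey, red = (128, 128, 128), (200, 30, 30)
    frame = [[grey] * 4 for _ in range(4)]
    for yy in (1, 2):
        for xx in (1, 2):
            frame[yy][xx] = red
    fig, gnd = figure_ground_colors(frame, [(1, 1, 3, 3)])
    print("figure Lab:", fig, "ground Lab:", gnd, "dE76:", delta_e76(fig, gnd))
```

Averaging in CIELAB rather than in RGB is a design choice made so that the mean and the distance between figure and ground roughly track perceived color. Every pixel inside a box counts as figure, so background around a character leaks into the figure mean. The depth-estimation step in the text is meant to reduce exactly this coarseness.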

0 kommentar(er)

0 kommentar(er)
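The claim above, that consecutive frames reveal the relative distance of pixels to the camera, can be illustrated with a simplified pinhole model. This is not the method of Ha (2016), which handles unknown camera parameters. The model assumes a camera with focal length $f$ that translates by a small baseline $b$ parallel to the image plane, without rotation. A scene point at lateral position $X$ and depth $Z$ then projects to

$$
x_1 = \frac{f X}{Z}, \qquad x_2 = \frac{f (X - b)}{Z}, \qquad d = x_1 - x_2 = \frac{f\, b}{Z} \;\Longrightarrow\; Z = \frac{f\, b}{d} \propto \frac{1}{d}.
$$

When $f$ and $b$ are unknown, depth is recoverable only up to a common scale. Still, pixels that shift more between frames are closer to the camera, which is the kind of cue that can separate a character in the foreground from the background.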